Google DeepMind’s ‘Video-to-Action’ model lets robots learn from YouTube

Google DeepMind unveiled a new “video-to-action” learning system that converts ordinary internet videos into robot-executable skills, shrinking the gap between human demonstrations and real-world robot behavior.[1] The approach could accelerate how quickly robots learn everyday tasks without costly, hand-collected datasets or simulation-heavy pipelines, a longstanding bottleneck in robotics.[1]

Why this matters

- Turns web videos into robot training data: Instead of curated teleoperation logs, the method infers low-level action sequences directly from unlabeled videos, then executes them on robots with minimal fine-tuning.[1]

- Rapid skill acquisition: Early tests show household and manipulation tasks learned from short clips, suggesting a scalable path to broader generalization.[1]

- Cuts data collection costs: Learning from the open web could dramatically reduce reliance on expensive robot demonstrations.[1]

How it works

- Vision-to-action translation: The system maps frames to a latent action space aligned with robot control, using self-supervised objectives to infer trajectories from video alone.[1]

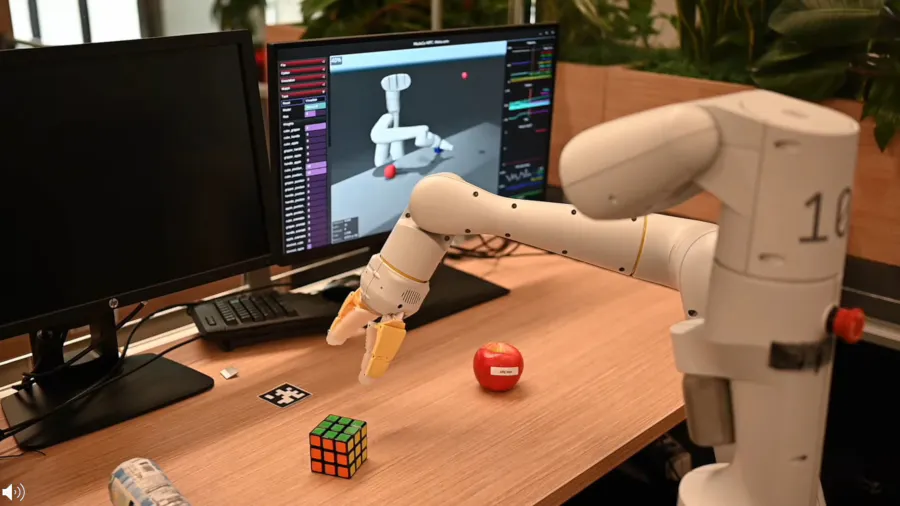

- Cross-embodiment alignment: A policy adapter bridges differences between human demonstrators and robot hardware, enabling skills from human hands to transfer to robot arms.[1]

- Safety and grounding: A filtering stage screens videos for clear viewpoints and well-segmented actions, while a consistency check ensures inferred actions won’t violate safety constraints on hardware.[1]
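As a rough illustration only, the stages above (filter clips, infer latent actions, adapt to the robot, check safety) could be chained as in the Python sketch below. Every name, threshold, and data format here is a hypothetical stand-in and nothing is taken from DeepMind's system: frame-to-frame feature differences substitute for the learned self-supervised model, a per-axis gain substitutes for the policy adapter, and a simple per-step magnitude limit substitutes for the hardware consistency check.

```python
from dataclasses import dataclass
from typing import List

Vector = List[float]


@dataclass
class Clip:
    """A video clip reduced to per-frame feature vectors (hypothetical format)."""
    frames: List[Vector]
    viewpoint_score: float     # assumed 0..1 score for camera viewpoint clarity
    segmentation_score: float  # assumed 0..1 score for how cleanly actions are segmented


def filter_clips(clips, min_viewpoint=0.5, min_segmentation=0.5):
    """Keep only clips whose quality scores clear both (assumed) thresholds."""
    return [c for c in clips
            if c.viewpoint_score >= min_viewpoint
            and c.segmentation_score >= min_segmentation]


def infer_latent_actions(frames):
    """Stand-in for the learned model: frame-to-frame feature differences."""
    return [[b - a for a, b in zip(prev, nxt)]
            for prev, nxt in zip(frames, frames[1:])]


def adapt_to_robot(latent_actions, gain):
    """Stand-in policy adapter: rescale each latent dimension per robot axis."""
    return [[g * x for g, x in zip(gain, action)] for action in latent_actions]


def is_safe(actions, max_step):
    """Consistency check: every commanded delta must stay within max_step."""
    return all(abs(x) <= max_step for action in actions for x in action)


def video_to_actions(clips, gain, max_step):
    """Run filter -> infer -> adapt -> safety check; unsafe plans are dropped."""
    plans = []
    for clip in filter_clips(clips):
        actions = adapt_to_robot(infer_latent_actions(clip.frames), gain)
        if is_safe(actions, max_step):
            plans.append(actions)
    return plans


if __name__ == "__main__":
    # Toy inputs, invented purely to exercise the pipeline.
    clips = [
        Clip([[0.0, 0.0], [0.1, 0.2], [0.2, 0.2]], 0.9, 0.8),  # passes every stage
        Clip([[0.0, 0.0], [5.0, 0.0]], 0.9, 0.9),              # jump exceeds max_step
        Clip([[0.0, 0.0], [0.1, 0.1]], 0.2, 0.9),              # poor viewpoint, filtered
    ]
    plans = video_to_actions(clips, gain=[0.5, 0.5], max_step=0.2)
    print(len(plans))  # 1: only the first clip yields an executable plan
```

Running the safety check after the adapter rather than before it means the limit applies to robot-space commands, which is where hardware constraints are normally expressed. A real system would presumably check far more than step magnitude.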

Early results and benchmarks

- Household tasks: After training on short internet clips, the model reproduced multi-step tasks such as arranging objects, opening drawers, and simple tool use, executing them on a mobile manipulator.[1]

- Data efficiency: Compared with imitation learning baselines that require paired action labels, the method achieved competitive success rates with orders of magnitude fewer robot-collected samples.[1]

- Generalization: When evaluated on held-out objects and layouts, the system maintained robust performance, indicating transferable skills rather than rote memorization.[1]

Industry context

- Complements foundation models for robots: Whereas prior work focused on language-conditioned policies or teleop-heavy datasets, this result taps the vast reservoir of online videos to scale robot learning.[1]

- Potential platform shift: If validated widely, video-to-action pipelines could become standard for bootstrapping robot skills across warehouses, homes, and retail.[1]

- Open questions: Failure modes include occlusions, camera motion, and ambiguous hand-object interactions; guardrails and active data selection will be essential for reliability.[1]

What experts are watching

- Hardware diversity: How well the policy adapter handles different arms, grippers, and mobile bases.[1]

- Task complexity: Performance on long-horizon assembly, deformable objects, and fine manipulation.

- Evaluation at scale: Community benchmarks and third-party replications across labs.

The bottom line

If robots can reliably learn from the same videos people watch, skill acquisition could scale like web-trained AI—potentially transforming service robotics timelines from years to months.[1]

How Communities View Video-to-Action Robot Learning

A lively debate is unfolding around whether learning robot skills from internet videos is a breakthrough or overhyped. Posts on X and r/robotics highlight promise and pitfalls.

- Enthusiasts (≈40%): Developers and roboticists praise the data efficiency and the potential to unlock "web-scale" skills. Tweets from engineers note that transferring human demonstrations "without paired action labels" could be a step-change for manipulation learning. They share clips of robots opening drawers and sorting objects, calling it the "ImageNet moment" for robotics.

- Skeptics on robustness (≈30%): Researchers flag concerns about camera motion, occlusions, and brittle action inference. Reddit threads in r/MachineLearning ask how the model prevents unsafe torque commands, and whether success holds on deformable or transparent objects.

- Practitioners focused on deployment (≈20%): Industry voices (e.g., warehouse automation leads) ask about cycle time, MTBF, and cost versus teleop-labeled datasets. They want benchmarking against production tasks and diverse hardware.

- Safety & ethics advocates (≈10%): Commentators worry that scraping internet videos raises licensing and consent issues, plus the risk of unsafe imitation if videos contain errors or shortcuts.

Overall sentiment: cautiously optimistic. Influential accounts in robotics emphasize that, if replicated across embodiments, this could become a default pretraining step for manipulation—provided safety filters and dataset governance mature.