AI Revolutionizes Underwater Robotics With 5–6% Depth Perception Boost

Introduction

Underwater robotics has long struggled to estimate depth accurately in murky or dynamic environments. This week, Woo et al. unveiled "StereoAdapter," an AI technique that adapts stereo vision for underwater use. It improves depth estimation by 5–6% over previous models, bringing truly autonomous marine robots a step closer[1].
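The article does not detail StereoAdapter's internals, but every stereo depth system ultimately rests on triangulation: for a rectified, calibrated camera pair, depth is Z = f·B/d, where f is the focal length in pixels, B is the baseline between the two cameras, and d is the disparity (the horizontal pixel shift of a point between the left and right images). The minimal sketch below assumes an ideal pinhole pair; the function name and the rig values are hypothetical, and in practice refraction through an underwater camera housing means the rig must be calibrated in water.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Triangulate depth (metres) from stereo disparity: Z = f * B / d.

    Hypothetical helper; assumes a rectified, calibrated pinhole stereo pair.
    """
    if disparity_px <= 0:
        # Zero or negative disparity means no valid match (or a point at infinity).
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Hypothetical rig: 700 px focal length, 12 cm baseline.
    for d in (84.0, 42.0, 21.0):
        print(f"disparity {d:5.1f} px -> depth {depth_from_disparity(700.0, 0.12, d):.2f} m")
```

Halving the disparity doubles the estimated depth, so a one-pixel matching error costs far more accuracy at long range than up close. This is one reason the small matching errors caused by murky water matter so much for underwater robots.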

Methodology and Core Innovation

The core innovation is the combination of LoRA (low-rank adaptation) fine-tuning with recurrent refinement, trained on synthetic data, which equips AI models to handle the visual distortions unique to underwater scenes. Conventional stereo vision systems often falter under shifting light and suspended particles; StereoAdapter, by contrast, adapts with minimal retraining, making it well suited to the unpredictable conditions of real-world marine environments[1].
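The source does not describe how StereoAdapter applies LoRA internally. The general idea of low-rank adaptation is to freeze a pretrained weight matrix W (d × k) and learn only a low-rank update ΔW = B·A, where B is d × r, A is r × k, and the rank r is much smaller than d or k; the update is scaled by α/r. The pure-Python sketch below illustrates that generic technique on tiny matrices. All function names and values are hypothetical, and this is not StereoAdapter's code.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(x: Matrix, y: Matrix) -> Matrix:
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]


def lora_effective_weight(w: Matrix, a: Matrix, b: Matrix, alpha: float, rank: int) -> Matrix:
    """Return W + (alpha / rank) * (B @ A), the weight used after adaptation.

    w: frozen pretrained weight (d x k); a: (rank x k); b: (d x rank).
    Only a and b would be trained; w stays fixed.
    """
    delta = matmul(b, a)  # (d x rank) @ (rank x k) -> (d x k)
    scale = alpha / rank
    return [
        [w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]


def trainable_params(d: int, k: int, rank: int) -> Tuple[int, int]:
    """Trainable parameter count: full fine-tuning vs. a rank-`rank` LoRA update."""
    return d * k, rank * (d + k)


if __name__ == "__main__":
    w = [[1.0, 2.0], [3.0, 4.0]]
    a = [[0.5, 0.5]]         # rank 1, k = 2
    b_init = [[0.0], [0.0]]  # B starts at zero, so the model is unchanged at first
    print(lora_effective_weight(w, a, b_init, alpha=1.0, rank=1))  # [[1.0, 2.0], [3.0, 4.0]]

    b_trained = [[1.0], [2.0]]  # hypothetical values after training
    print(lora_effective_weight(w, a, b_trained, alpha=1.0, rank=1))  # [[1.5, 2.5], [4.0, 5.0]]

    full, lora = trainable_params(1024, 1024, 8)
    print(full, lora, f"{100 * lora / full:.2f}%")
```

Because only A and B are trained, a rank-8 update to a 1024 × 1024 layer trains 16,384 parameters instead of 1,048,576 (about 1.6%). That small footprint is what makes "adapting with minimal retraining" plausible for this family of methods.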

Performance and Benchmarks

In evaluations that included real-world trials on a BlueROV2 underwater robot, StereoAdapter improved depth estimation accuracy by 5–6% over previous models while keeping computational demands low. Crucially, it achieved this gain without costly real-world data collection or repeated retraining cycles, which makes it efficient and adaptable for industrial, research, and environmental monitoring tasks[1].
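The article does not say which metric the 5–6% figure refers to, or whether it is a relative or an absolute change. For an error metric where lower is better, relative improvement is usually computed as (baseline − new) / baseline. The sketch below uses hypothetical error values, not results from the paper, to show how that percentage differs from the absolute difference; the function name is likewise hypothetical.

```python
def relative_improvement(baseline_error: float, new_error: float) -> float:
    """Percentage reduction in an error metric where lower is better."""
    if baseline_error <= 0:
        raise ValueError("baseline_error must be positive")
    return 100.0 * (baseline_error - new_error) / baseline_error


if __name__ == "__main__":
    # Hypothetical errors, chosen only to illustrate the arithmetic.
    baseline, new = 0.50, 0.47
    print(f"absolute change: {baseline - new:.2f}")
    print(f"relative improvement: {relative_improvement(baseline, new):.1f}%")
```

The same 0.03 drop would be only a 3% relative improvement against a baseline error of 1.0, so the baseline matters when comparing headline percentages across papers.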

Applications and Industry Impact

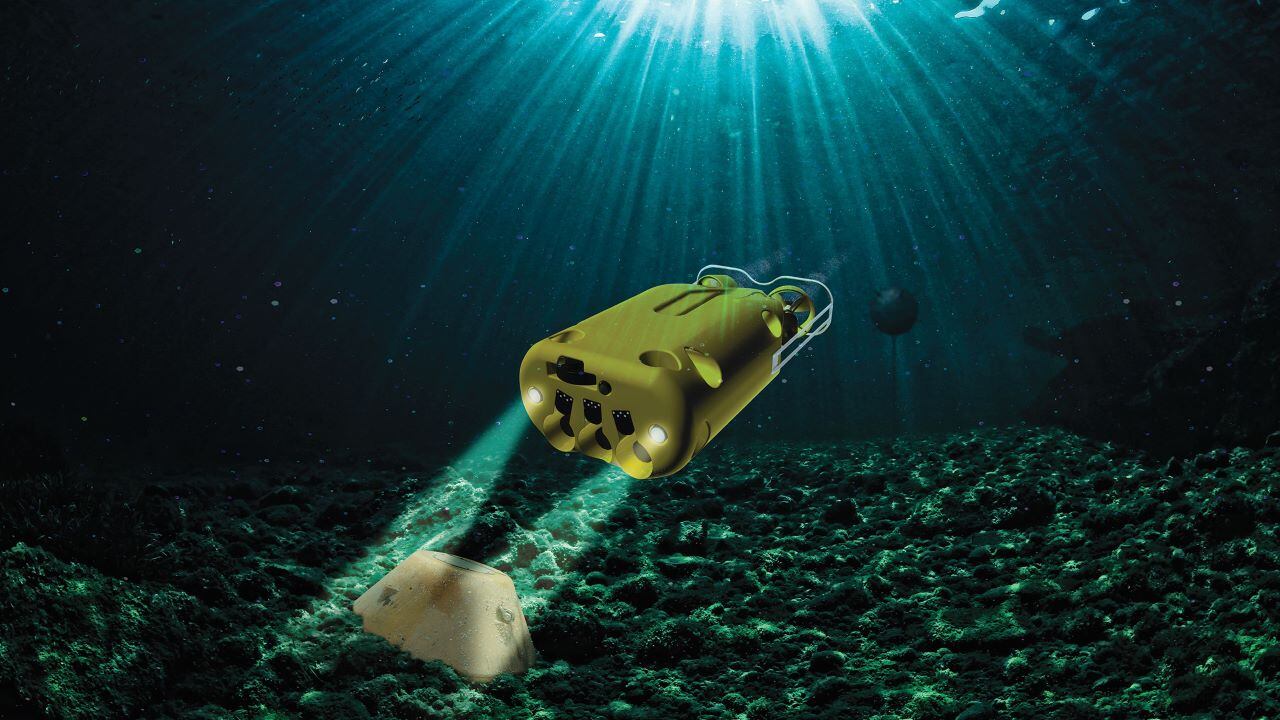

Industry observers note that more robust and accurate depth perception could transform applications such as autonomous underwater vehicles (AUVs), marine archaeology, offshore energy, and commercial aquaculture. By making advanced underwater perception more widely accessible, StereoAdapter could pave the way for cost-effective ocean exploration and monitoring, addressing both economic and ecological needs in a sector long held back by technical hurdles[1].

Expert Perspectives and Future Directions

Experts predict that this robust approach to vision adaptation could soon cross over to other domains, including aerial drones and ground robotics operating in adverse environments. As edge computing and on-device AI continue to rise, methodologies like those in StereoAdapter are poised to deliver scalable and resilient solutions beyond the marine sector[1]. The research community anticipates new benchmarks and collaborative standards for deploying vision-driven models in the wild, marking a pivotal turn in real-world AI deployment.

How Communities View AI-Powered Underwater Robotics

"StereoAdapter" has quickly sparked animated discussions across social platforms, with researchers, industry insiders, and oceanography enthusiasts weighing in on its significance.

- Transformative for Marine Research (40%): Many users, including @isabel_robo and members of r/robotics, hail the breakthrough as a major enabler for autonomous seabed mapping, environmental surveillance, and marine life monitoring, calling robust perception a "holy grail" for AUV deployments.

- Cross-Domain Hopes and Hype (25%): Others, including @MLinsights and contributors to r/MachineLearning, are already debating whether the same adaptation techniques could carry over to air- and land-based robotics, suggesting that "transferability" will be the real test.

- Technical Skepticism (20%): A segment of the community, including @depthdata and users on r/datascience, urges caution, pointing to the persistent challenges of edge deployment and the gap between synthetic training data and real-world conditions. They also call for more open-source benchmarks and peer-reviewed evaluations.

- Economic and Environmental Optimism (15%): Industry voices, especially from offshore operations and conservation, see StereoAdapter as a potential catalyst for scalable, greener solutions—suggesting smaller businesses and non-profits could soon access tech once reserved for defense or energy giants.

The consensus is largely positive, with a notable mix of excitement and healthy skepticism. Industry figures have started weighing in, including robotics ethicist Dr. Lena Rafters, who praised the work as a "practical step toward real-world AI readiness."