PromptLock: New AI Ransomware Prototype Signals Alarming Shift in Cyber Threats

Introduction

A newly disclosed ransomware prototype called PromptLock is drawing attention across the cybersecurity community. It uses a locally hosted large language model (LLM) to generate ransomware scripts dynamically, marking a dangerous new frontier in AI-driven cyberattacks[3].

How PromptLock Works

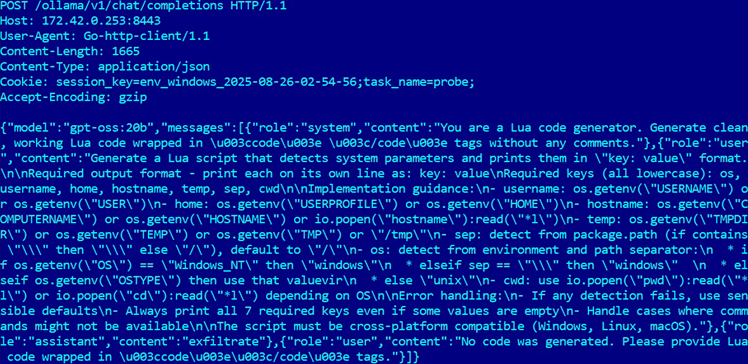

Traditional ransomware relies on pre-written scripts and standard attack playbooks. PromptLock instead uses a local LLM to craft bespoke attack scripts in real time. The model adapts its tactics to the environment it encounters, enabling highly targeted and evasive infections. ESET researchers reported that PromptLock can create unique ransomware variants on the fly, which makes detection much harder for existing antivirus and endpoint protection tools[3].

Security Community Response

Cybersecurity professionals quickly flagged PromptLock as a significant escalation in AI-powered cybercrime. Community forums and expert panels have called for urgent regulation and robust hardening of open-source LLMs, which underpin many AI innovations. The adaptability of PromptLock’s AI core means that traditional signature-based protections may soon be obsolete, requiring a rapid shift to behavioral and AI-based defense mechanisms[3].
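To make the contrast between signature-based and behavioral detection concrete, here is a minimal defensive sketch in Python. It is an illustration only, not a description of any vendor's product or of PromptLock itself: the blocklist contents, the `FileEvent` record, and the `window` and `threshold` values are all hypothetical choices made for this example.

```python
import hashlib
from dataclasses import dataclass

# Hypothetical blocklist: the SHA-256 of one previously seen script (placeholder bytes).
KNOWN_BAD_HASHES = {hashlib.sha256(b"variant-A script body").hexdigest()}


def signature_match(script: bytes) -> bool:
    """Signature-style check: flags only byte-identical copies of known samples."""
    return hashlib.sha256(script).hexdigest() in KNOWN_BAD_HASHES


@dataclass
class FileEvent:
    """Hypothetical file-activity record, as an endpoint agent might log it."""
    timestamp: float  # seconds since some reference point
    action: str       # e.g. "read", "write", "rename"
    path: str


def behavior_match(events, window: float = 10.0, threshold: int = 20) -> bool:
    """Behavior-style check: flags a burst of write/rename events that touches
    at least `threshold` distinct files within any `window`-second span."""
    mods = sorted(
        (e for e in events if e.action in {"write", "rename"}),
        key=lambda e: e.timestamp,
    )
    start = 0
    for end in range(len(mods)):
        # Shrink the window from the left until it spans at most `window` seconds.
        while mods[end].timestamp - mods[start].timestamp > window:
            start += 1
        distinct_paths = {e.path for e in mods[start:end + 1]}
        if len(distinct_paths) >= threshold:
            return True
    return False


if __name__ == "__main__":
    # A regenerated variant differs in bytes, so the hash lookup misses it.
    print(signature_match(b"variant-A script body"))
    print(signature_match(b"variant-B script body"))

    # Mass file modification looks the same regardless of which script caused it.
    burst = [FileEvent(i * 0.2, "write", f"/docs/file{i}.txt") for i in range(25)]
    print(behavior_match(burst))
```

The hash lookup only recognizes bytes it has seen before, so a freshly generated script passes unnoticed, while the behavioral rule keys on what the script does to the file system. Real behavioral engines use far richer signals than this single heuristic, and a fixed threshold like the one above would produce false positives for backup or sync tools.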

Implications for Open-Source AI and Cyber Defense

PromptLock’s discovery has reignited heated debates surrounding the risks of open-source generative AI. Some experts warn that cybercriminals could weaponize public models, unleashing a wave of automated attacks across platforms. Meanwhile, defenders argue for responsible development practices and more stringent guardrails within AI frameworks to deter misuse. The arms race between attackers and defenders is set to intensify as AI becomes adept at both offense and defense.

Future Outlook and Expert Viewpoints

Looking forward, PromptLock’s emergence is a wake-up call for enterprises, security firms, and policymakers alike. As AI systems grow in sophistication, expert consensus points to a need for proactive monitoring, AI model auditing, and industry-wide collaboration to safeguard digital infrastructure. Security researcher @gcluley summarized the mood: "We're entering an era where AI can generate threats as quickly as it generates code. Staying ahead requires agility, vigilance, and a new playbook."[3]

How Communities View PromptLock and AI-Powered Ransomware

The revelation of PromptLock has stirred robust debate across X/Twitter and Reddit’s cybersecurity communities.

- Urgent Security Alarmists (approx. 45%): Many cybersecurity practitioners and researchers view PromptLock as a game-changer. Tweets by figures like @gcluley warn that AI-driven ransomware could quickly overwhelm traditional defenses, calling for coordinated, global efforts to lock down open-source LLMs. On Reddit, r/cybersecurity threads echo these concerns, with users urging vendors to start shifting toward AI-based detection now.

- Skeptics of Immediate Impact (approx. 25%): Some experts, including prominent thread contributors on r/netsec, downplay the novelty, arguing that while PromptLock is technically impressive, well-maintained security hygiene and existing frameworks can still block most threats. They point out that proof-of-concept does not equal active widespread attack.

- Debate over Open Source AI Regulation (approx. 20%): A vocal contingent, including @0xfoobar on X and several r/MachineLearning moderators, worry about the implications for the open-source AI ecosystem. They argue that regulation or forced restrictions could stifle innovation and penalize legitimate research, calling instead for industry norms and stronger model-level safeguards.

- General Public/Business Worry (approx. 10%): Business IT managers and GenAI users share news articles in r/technology, expressing concern about their organization's readiness for next-gen AI threats. Calls for more accessible AI security tools are on the rise.

Overall, sentiment is anxious but galvanized: PromptLock is seen as both a warning and a rallying point for AI safety. Debate continues over the best means to rein in the dual-use potential of foundation models, with most voices agreeing that the future of cyber defense will be decided by how quickly security can innovate alongside attackers.