AToken Unifies Image, Video, and 3D AI Into a Single Vision Model

AToken: The Universal Visual Tokenizer Shaping Next-Gen AI

A new model called AToken is changing how artificial intelligence interprets the visual world. Released in September 2025, AToken introduces a unified, transformer-based architecture that natively encodes images, videos, and 3D data into a shared representation space, which lets one system reason about and analyze all three formats together[2].
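Here is one way to picture a "shared representation space" at the input level: lift every input into a common 4D grid of (time, depth, height, width) and cut it into equal-size patches. Every token then has the same length and a coordinate in the same index space. This is a minimal sketch under stated assumptions, not AToken's published tokenizer. The `Token` class, the `to_4d`/`tokenize`/`ramp` helpers, the 2x2 patch size, and the choice to represent 3D data as a stack of dense voxel slices are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple

Plane = List[List[float]]  # one 2D slice: rows of pixel/voxel intensities


@dataclass
class Token:
    coord: Tuple[int, int, int, int]  # (t, z, y, x) patch index in the shared 4D grid
    values: List[float]               # flattened p*p patch; same length for every modality


def to_4d(data, modality: str) -> List[List[Plane]]:
    """Lift an image, video, or voxel volume into a common (T, D, H, W) layout."""
    if modality == "image":   # (H, W)    -> (1, 1, H, W)
        return [[data]]
    if modality == "video":   # (T, H, W) -> (T, 1, H, W)
        return [[frame] for frame in data]
    if modality == "volume":  # (D, H, W) -> (1, D, H, W)
        return [list(data)]
    raise ValueError(f"unknown modality: {modality}")


def tokenize(data, modality: str, p: int = 2) -> List[Token]:
    """Cut every (t, z) slice into non-overlapping p x p patches (hypothetical scheme)."""
    tokens = []
    for t, depth_stack in enumerate(to_4d(data, modality)):
        for z, plane in enumerate(depth_stack):
            h, w = len(plane), len(plane[0])
            if h % p or w % p:
                raise ValueError("height and width must be divisible by the patch size")
            for y in range(0, h, p):
                for x in range(0, w, p):
                    patch = [plane[y + dy][x + dx] for dy in range(p) for dx in range(p)]
                    tokens.append(Token((t, z, y // p, x // p), patch))
    return tokens


def ramp(h: int, w: int, offset: float = 0.0) -> Plane:
    """Toy h x w plane whose values increase row by row."""
    return [[offset + r * w + c for c in range(w)] for r in range(h)]


image = ramp(4, 4)                                   # one 4x4 picture
video = [ramp(4, 4, 100.0 * f) for f in range(3)]    # three 4x4 frames
volume = [ramp(4, 4, 1000.0 * d) for d in range(2)]  # two 4x4 depth slices

img_tokens = tokenize(image, "image")
vid_tokens = tokenize(video, "video")
vol_tokens = tokenize(volume, "volume")
print(len(img_tokens), len(vid_tokens), len(vol_tokens))  # → 4 12 8
```

Every token carries a (t, z, y, x) coordinate and a vector of the same length, so in principle a single transformer could attend across all three inputs at once. A production tokenizer would also project patches into a learned embedding and would likely store 3D data more compactly than dense slices.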

Why AToken Matters Now

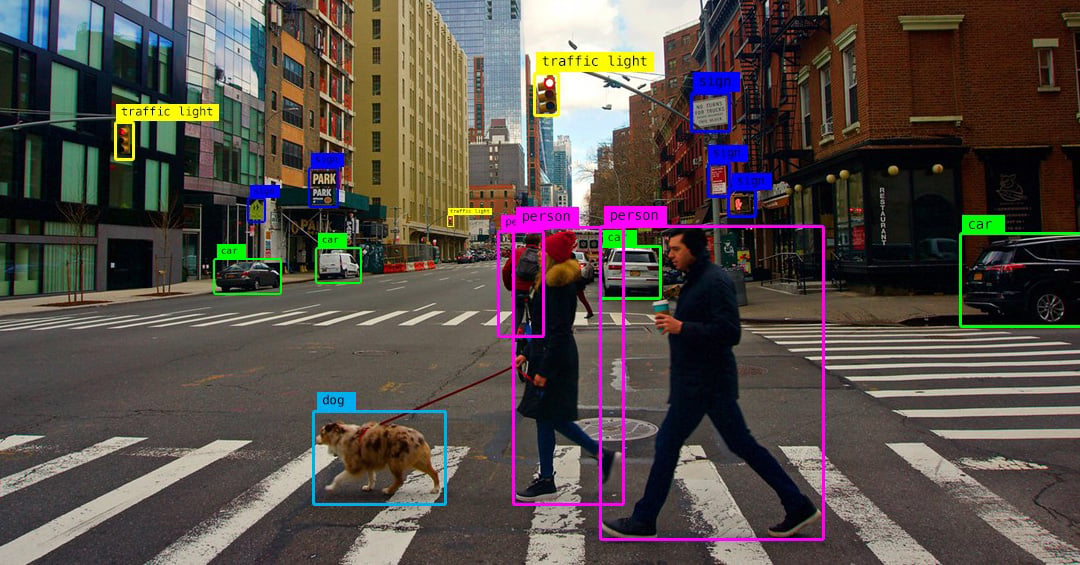

As AI becomes part of daily life, from smartphones to autonomous vehicles, the need for systems that handle different visual formats seamlessly is growing. Traditional models are compartmentalized: image models for photos, video models for motion, and so on. AToken bridges these silos, allowing a single AI to analyze, relate, and cross-reference images, videos, and 3D data. This leap could power everything from smarter robots to unified medical imaging tools[2].

Key Technical Innovations

- Transformer Backbone: Builds on the transformer architecture, which processes variable-length token sequences and can therefore handle different input types flexibly.

- Shared Embedding Space: Learns representations that are meaningful across images, videos, and 3D scenes, boosting model versatility.

- Efficiency Boost: Incorporates token pruning and mixture-of-experts, two methods that concentrate compute where it matters so the model can process high-resolution and complex data without slowing down[2].
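Token pruning and mixture-of-experts are general techniques, and the description above does not say exactly how AToken applies them. The sketch below shows one common form of each under stated assumptions. Pruning keeps the top fraction of tokens by a saliency score, here hypothetically taken to be vector length. A top-1 mixture-of-experts layer, in the style of Switch Transformers, then sends each surviving token through a single expert chosen by a linear gate. The function names, toy tokens, gate weights, and experts are illustrative only.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def prune_tokens(tokens: Sequence[Vector], scores: Sequence[float], keep_ratio: float) -> List[Vector]:
    """Token pruning: keep the highest-scoring fraction of tokens, in original order."""
    k = max(1, math.ceil(len(tokens) * keep_ratio))
    top = sorted(range(len(tokens)), key=lambda i: scores[i], reverse=True)[:k]
    return [list(tokens[i]) for i in sorted(top)]


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def moe_top1(tokens: Sequence[Vector], gate: Sequence[Vector],
             experts: Sequence[Expert]) -> Tuple[List[Vector], List[int]]:
    """Top-1 mixture-of-experts: a linear gate sends each token to exactly one expert."""
    outputs, chosen = [], []
    for tok in tokens:
        logits = [sum(w * x for w, x in zip(row, tok)) for row in gate]
        probs = softmax(logits)
        best = max(range(len(experts)), key=lambda e: probs[e])
        # Only the selected expert runs; its output is scaled by the gate probability.
        outputs.append([probs[best] * y for y in experts[best](tok)])
        chosen.append(best)
    return outputs, chosen


# Toy example: 2-D tokens, saliency = vector length (a hypothetical scoring rule).
tokens = [[0.1, 0.0], [2.0, 1.0], [0.0, 0.2], [-1.0, 1.5]]
scores = [math.hypot(*t) for t in tokens]
kept = prune_tokens(tokens, scores, keep_ratio=0.5)

gate = [[1.0, 0.0], [0.0, 1.0]]  # expert 0 scores x[0], expert 1 scores x[1]
experts = [lambda v: [2.0 * x for x in v], lambda v: [-x for x in v]]
outputs, chosen = moe_top1(kept, gate, experts)
print(kept, chosen)  # → [[2.0, 1.0], [-1.0, 1.5]] [0, 1]
```

Pruning shrinks the sequence before the expensive layers run. The top-1 router keeps per-token compute roughly constant as experts are added, because only one expert executes for each token.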

Recent research reports strong results for AToken on new benchmarks. For instance, it performs well on multidisciplinary movie question answering (Cinéaste) and can switch between tasks with minimal retraining[2].

Security, Trust, and Real-World Reach

Alongside its capabilities, AToken is part of a wave of new models emphasizing trustworthiness and robustness. Advanced domain adaptation and test-time augmentation techniques make it resilient to changes in lighting, sensor, or context. Early testing in domains from remote sensing to medicine underscores its broad potential.
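Test-time augmentation, cited above as a robustness technique, works by running the model on several perturbed copies of the input and averaging the predictions. The sketch below is a generic version of that idea, not AToken's procedure. The brightness factors (0.8, 1.0, 1.2), the clipping to [0, 1], and the `toy_model` classifier are hypothetical stand-ins.

```python
from statistics import mean
from typing import Callable, List, Sequence

Image = List[List[float]]               # grayscale intensities in [0, 1]
Model = Callable[[Image], List[float]]  # returns one probability per class


def adjust_brightness(img: Image, factor: float) -> Image:
    """Scale intensities and clip to [0, 1] to simulate a lighting change."""
    return [[min(1.0, max(0.0, px * factor)) for px in row] for row in img]


def tta_predict(model: Model, img: Image,
                factors: Sequence[float] = (0.8, 1.0, 1.2)) -> List[float]:
    """Test-time augmentation: average class probabilities over brightness-shifted copies."""
    preds = [model(adjust_brightness(img, f)) for f in factors]
    return [mean(p[c] for p in preds) for c in range(len(preds[0]))]


def toy_model(img: Image) -> List[float]:
    """Hypothetical 2-class model: P(bright) is simply the mean intensity."""
    m = mean(px for row in img for px in row)
    return [1.0 - m, m]


bright = [[0.9, 0.9], [0.9, 0.9]]
single = toy_model(bright)                # prediction on the original input only
averaged = tta_predict(toy_model, bright)  # prediction averaged over three lighting variants
print(single, averaged)
```

Averaging over lighting variants smooths out predictions that would otherwise swing with exposure. In practice, the augmentations would be chosen to match the shifts expected at deployment, such as sensor noise or color changes.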

Experts believe AToken, and models like it, signal a "generalist future" for AI vision, in which systems learn as humans do, drawing meaning from varied sensory inputs. As visual data explodes (over 500 hours of video are uploaded to YouTube every minute), such flexibility may soon be as essential to artificial intelligence as language is to people[2].

Looking Ahead: Universal Vision, Real-World AI

AToken's debut has generated excitement across the computer vision field, drawing comparisons to major shifts such as the arrival of word2vec in language AI. Challenges remain: fine-grained reasoning and human-level intuition are still out of reach on some benchmarks. Still, momentum is building for a new generation of universal visual models. While further validation and large-scale trials are needed, researchers predict that by 2026, frameworks derived from AToken could power everything from augmented reality assistants to advanced security systems and more capable creative tools.

Industry experts and open source contributors alike are closely watching how fast AToken's multimodal approach will transition from the lab to commercial products. As the lines blur between images, videos, and 3D scenes, one thing is clear: the era of truly unified vision AI has begun[2].

How Communities View AToken’s Universal Visual Tokenizer

A lively debate is emerging across X/Twitter and Reddit's computer vision forums over AToken’s breakthrough.

1. Enthusiastic Practitioners (≈40%): Many AI engineers and researchers (@theophilusCV, @visionNN) are hailing AToken as a milestone. On r/MachineLearning, posts highlight its ability to handle images, video, and 3D data in a single model as a real enabler for generalist AI, and community members share hopes for faster progress in robotics, AR, and healthcare applications.

2. Skeptical Experts (≈25%): Some computer vision veterans (@cogvis, @karpathy) urge caution, noting that benchmarks like Cinéaste and GenExam show current models (including AToken) still make basic reasoning errors. They question the hype and ask for more evidence across edge cases before full-scale deployment.

3. Application Innovators (≈20%): Startups and applied AI developers on r/ComputerVision are brainstorming ways to use AToken for fast film analysis, smarter security cameras, and next-generation gaming engines. These discussions are pragmatic, focusing on computational cost and fair use of pretrained models.

4. Privacy & Ethics Advocates (≈15%): A growing minority, including digital rights organizations and academics (@aiwatchdogs), raises concerns that such powerful vision AI could be misused for surveillance or deepfake synthesis, sparking threads about possible regulation.

Overall, the sentiment is cautiously optimistic. While practitioners are excited by the progress, most community leaders agree that robustness, ethics, and real-world testing will decide whether AToken’s unified model becomes the new foundation or remains just another research curiosity.