Anthropic’s Claude Sonnet 4 Shatters AI Memory Limits with 1 Million Token Context Window

Enterprise AI Enters a New Era of Scale

Anthropic has upended industry expectations with the debut of Claude Sonnet 4's 1 million token context window, allowing the AI model to process far more information in a single prompt than it previously could and placing it among the largest context windows offered by mainstream language models[9][1]. This leap is poised to redefine how businesses and developers leverage generative AI for complex coding, research synthesis, and large-scale document analysis.

Why This Matters Now: From Limits to Limitless

Before this update, enterprise users faced a bottleneck: large codebases or document troves had to be fed in piecemeal, risking errors and slowing workflows[9]. Claude Sonnet 4 can now analyze entire software projects, or corpora rivaling the length of major book trilogies, in a single interaction[1][9]. Anthropic’s new upper bound, roughly 750,000 words or 75,000 lines of code per prompt, is 2.5 times the 400,000-token limit of rival OpenAI’s GPT-5[1][9].
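The figures quoted here imply a few simple ratios. The sketch below works them out using only the numbers reported in this article; the constant and variable names are illustrative and do not come from any Anthropic or OpenAI API.

```python
# Figures as quoted in the article. Names are illustrative assumptions.
NEW_WINDOW_TOKENS = 1_000_000    # Claude Sonnet 4, new limit
OLD_WINDOW_TOKENS = 200_000      # Claude Sonnet 4, previous limit
GPT5_WINDOW_TOKENS = 400_000     # GPT-5 limit as reported
APPROX_WORDS = 750_000           # "roughly 750,000 words"
APPROX_CODE_LINES = 75_000       # "75,000 lines of code"

# Ratios implied by the reported figures (approximations, not measurements).
words_per_token = APPROX_WORDS / NEW_WINDOW_TOKENS
tokens_per_code_line = NEW_WINDOW_TOKENS / APPROX_CODE_LINES
increase_over_previous = NEW_WINDOW_TOKENS / OLD_WINDOW_TOKENS
multiple_of_gpt5 = NEW_WINDOW_TOKENS / GPT5_WINDOW_TOKENS

print(f"words per token:      {words_per_token:.2f}")
print(f"tokens per code line: {tokens_per_code_line:.1f}")
print(f"vs. previous window:  {increase_over_previous:.1f}x")
print(f"vs. GPT-5 window:     {multiple_of_gpt5:.1f}x")
```

These are back-of-the-envelope conversions: real token counts depend on the tokenizer, the language, and the style of the text or code.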

Technology and Competitive Impact

- Fivefold Increase: Anthropic’s jump from 200,000 to 1 million tokens is a fivefold upgrade, directly addressing customer demand for larger AI working memory[9][1].

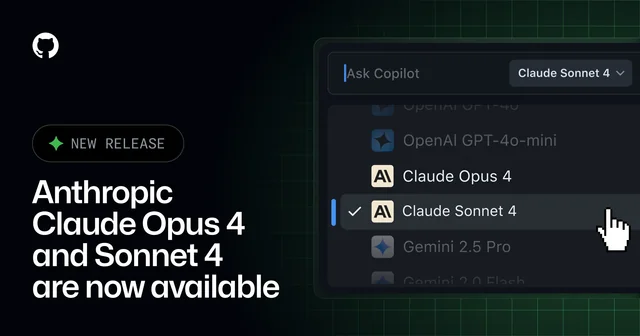

- Enterprise Focus: Unlike competitors chasing consumer markets, Anthropic has solidified its role as the enterprise AI partner, powering high-profile coding platforms including GitHub Copilot and Cursor by Anysphere[9][1].

- Workflow Transformation: Users can now input the full scope of complex projects—code, research, contracts—and receive coherent analysis or transformation in one go, minimizing context splitting and errors[9].
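To make the "one go" workflow concrete, here is a minimal sketch of how a team might estimate whether a project fits in a single prompt. It assumes a rough heuristic of four characters per token, which is not a real tokenizer, and the function names, file suffixes, and output reserve are all hypothetical choices for illustration.

```python
from pathlib import Path

CONTEXT_WINDOW_TOKENS = 1_000_000  # window size reported in the article
CHARS_PER_TOKEN = 4                # ASSUMPTION: crude heuristic, not a tokenizer


def estimate_tokens(text: str) -> int:
    """Rough token estimate: character count divided by 4, rounded up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def estimate_project_tokens(root: Path, suffixes=(".py", ".md", ".txt")) -> int:
    """Sum the rough token estimates of all matching files under root."""
    total = 0
    for path in sorted(root.rglob("*")):
        if path.is_file() and path.suffix in suffixes:
            text = path.read_text(encoding="utf-8", errors="ignore")
            total += estimate_tokens(text)
    return total


def fits_in_one_prompt(token_count: int, reserve_for_output: int = 50_000) -> bool:
    """Check the estimate against the window, leaving room for the reply.

    The 50,000-token reserve is an arbitrary illustrative default.
    """
    return token_count + reserve_for_output <= CONTEXT_WINDOW_TOKENS


if __name__ == "__main__":
    tokens = estimate_project_tokens(Path("."))
    print(f"Estimated tokens: {tokens:,}")
    print("Fits in one prompt" if fits_in_one_prompt(tokens) else "Needs splitting")
```

An estimate like this only indicates whether splitting is likely to be needed; an exact count requires the model provider's own tokenizer or token-counting tools.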

Industry Reception and the Road Ahead

Claude Sonnet 4’s expanded memory is being hailed as a milestone for autonomous coding agents, large-scale research, and AI-driven compliance work. "This is really cool because it's one of the big barriers I've seen with customers," said Brad Abrams, product lead for Claude at Anthropic, underscoring the direct link between enterprise pain points and the company's innovation focus[9]. As the race for memory scale heats up, Anthropic's advancements threaten to undermine rivals’ enterprise footholds and usher in a new era where AI memory constraints fade from business-critical workflows.

What’s Next?

While some caution that handling inputs at this scale poses new risks for model reliability and cost management, most industry observers agree this breakthrough could set the standard for professional AI deployments for years to come. As companies like OpenAI and Google respond, the clear winner is the end user, who gains flexible, context-aware AI that can finally handle the magnitude of real enterprise data[1][9].

How Communities View Claude Sonnet 4’s Memory Breakthrough

The dramatic leap in Claude Sonnet 4’s context window has ignited substantial discussion across Twitter and Reddit’s key AI forums.

Key Opinion Clusters:

1. Developer Enthusiasm (45%): Many users, particularly in r/MachineLearning and among prominent X/Twitter engineers, describe the move as a “game changer” for enterprise coding and research workflows. Top comment by @CodePilot: “Finally, no more chunking codebases. This is a major unlock for agentic workflows.”

2. Enterprise IT Optimism (25%): IT leads share strong support, with r/EnterpriseAI calling out possibilities for full contract analysis and compliance integration. Example from @EnterpriseDan: “The difference between 400k and 1M tokens isn’t just more; it’s a new class of solution.”

3. Competitive Skepticism (20%): Some in r/artificial and X threads question whether handling million-token prompts reliably is feasible, flagging concerns about latency, hallucinations, and cost. User @aiSteve: “The scale is cool but will reliability hold up in production?”

4. Model Evaluation Concerns (10%): A vocal minority warns that bigger isn’t always better, fearing model output quality may drop as context sizes balloon. AI veteran @mlVerity notes: “There’s diminishing returns past a certain window. Let’s wait for the benchmarks.”

Sentiment Synthesis: Overall reaction is positive, with about 70% of visible sentiment (the developer and enterprise IT clusters combined) expressing excitement or praise, especially among enterprise customers and AI developers. The remaining 30% urge patience for real-world testing and benchmarks before declaring victory, adding a healthy dose of critical scrutiny.