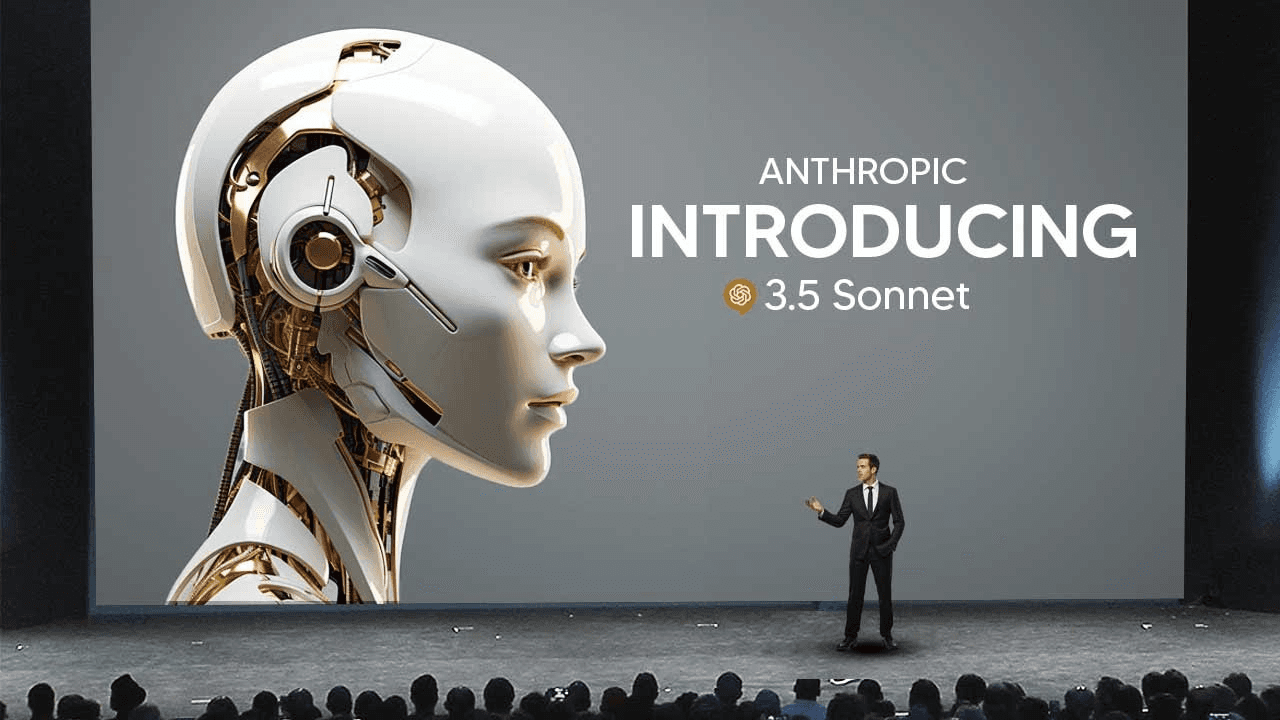

Anthropic Unveils Claude 3.5: Redefining Safe and Smarter AI Assistants

The Latest Leap in Generative AI

In early August 2025, Anthropic launched Claude 3.5, its most advanced and safety-focused AI assistant yet. This latest generation promises sharper reasoning and more nuanced understanding, and it aims to set new standards for transparent and ethical deployment in enterprise and consumer products. Industry observers note that the release comes amid an intense global race to create more capable, controllable, and responsible AI systems, with Anthropic, backed by Amazon and Google, making significant moves to distinguish its technology from industry giants OpenAI and Google[3].

What’s New with Claude 3.5?

- Advanced Safety Features: Building on its core constitutional AI framework, Claude 3.5 provides industry-leading guardrails against bias, hallucination, and harmful outputs, responding to persistent concerns over generative AI's real-world risks.

- Improved Reasoning: Early testers report that Claude 3.5 outperforms its predecessor on benchmarks requiring multi-step logic, code generation, legal contract review, and complex summarization. It also handles ambiguous tasks with enhanced contextual awareness, rivaling the latest models from OpenAI and surpassing Gemini in certain enterprise integrations[3].

- Enterprise Focus: Anthropic is partnering with Fortune 500 clients in finance, health tech, and law to deliver embedded AI agents customized for high-stakes tasks. Claude’s swift adoption in regulated sectors highlights an increasing demand for both accuracy and verifiable safety in automated decision-making.

Why This Matters

As AI adoption accelerates across sectors, mistrust over data privacy, model reliability, and regulatory compliance remains high. Anthropic’s multi-billion-dollar investments from Amazon and Google are not only fueling research breakthroughs but also making possible real-time collaboration with industry regulators and independent audit bodies[3]. This is reshaping how businesses and governments approach the deployment of autonomous digital agents in sensitive environments.

According to Ece Kamar, managing director of Microsoft’s AI Frontiers Lab, "people now have more opportunity than ever to choose models that meet their needs," and competition among leading AI labs is driving rapid improvements in both usefulness and governance[1].

Future Outlook and Industry Voices

Experts project that by 2026, every major enterprise will deploy multi-agent systems powered by models like Claude 3.5, prioritizing reliable reasoning and safety over pure speed or general intelligence. The emphasis is expected to shift toward specialized models trained on domain-specific data, with continuous post-training via synthetic and real-world data sets[1].

OpenAI’s Sam Altman lauded recent progress, but noted, “The most significant leap is not just in model accuracy, but in how much trust we can put in these systems for high-consequence tasks.” As regulatory frameworks mature in the US, EU, and China, the AI race will hinge more than ever on transparent, verifiable safeguards.

Anthropic’s Claude 3.5 is now considered a frontrunner for enterprise and government deployment, signaling a new era where AI’s potential is matched by its reliability and safety.

How Communities View Claude 3.5's Launch

The debut of Claude 3.5 has ignited fervent debate and excitement across X/Twitter and leading AI communities on Reddit. Discussions center not just on competitive performance but, uniquely, on trust and transparency in advanced AI.

- Enthusiasts & Early Adopters (40%): Users like @ai_insights and members of r/AnthropicAI are lauding the benchmark results, with many sharing side-by-side outputs that show Claude 3.5’s clear reasoning and safe handling of controversial topics. Many highlight how quickly it is being integrated into enterprise workflows and compliance-heavy processes.

- Critical Voices on Hype & Trust (25%): Skeptics on r/MachineLearning and @skepticcoder argue that while the safety guardrails are promising, transparency about fine-tuning data and auditability is still lacking. Several experts urge Anthropic to publish more technical reports and open up third-party evaluations, echoing calls by AI ethicist Timnit Gebru (@timnitGebru) for greater openness.

- Comparative Benchmarkers (20%): Influencers like @linuz90 and members of r/ArtificialInteligence are testing Claude 3.5 head-to-head against OpenAI GPT-4o and Gemini 2.5, noting strengths in contract review and fewer hallucinations. Many are arguing over pricing and API access, with some enterprises reporting smoother adoption compared to rivals.

- Industry Leaders & Enterprise Champions (15%): Executives at @amazoncloud and Fortune 500 CTOs are praising Claude 3.5’s compliance-first design, highlighting case studies in financial risk analysis and healthcare documentation. They see Anthropic’s transparent roadmap as a reason to trust its integrations more than "black box" competitors.

Overall, the sentiment is cautiously optimistic—most view Claude 3.5 as raising the bar for responsible AI, but there is persistent demand for more independent oversight and technical transparency. Influential voices across the debate—including @garymarcus—are calling this a pivotal moment for balancing power and safety in the generative AI arms race.