AI Models Achieve 33x Energy Efficiency Milestone—A New Era for Sustainable AI

AI Energy Efficiency Breakthrough: Why It Matters

Artificial intelligence is entering a new era of sustainability. In a landmark research release on August 21, 2025, multiple papers reported that leading AI systems now use about as much energy per query as running a television for 9 seconds, roughly the energy needed to boil five drops of water. That is a 33-fold improvement in energy efficiency compared to just two years ago, a leap with major implications for cloud costs, scalability, and environmental impact[3].
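To put the TV comparison into units, the per-query figure can be roughly estimated as follows. This is a back-of-the-envelope sketch only: the 100 W television is an illustrative assumption that does not come from the research (real sets vary widely), and the earlier per-query figure is simply what the 33-fold ratio implies.

```python
# Rough conversion of the "9 seconds of TV" analogy into watt-hours.
# ASSUMPTION: the TV draws 100 W. This value is illustrative, not from the research.
TV_POWER_WATTS = 100.0
TV_SECONDS = 9.0
IMPROVEMENT_FACTOR = 33.0  # the 33-fold efficiency gain cited in the article


def energy_wh(power_watts: float, seconds: float) -> float:
    """Energy in watt-hours used by a device drawing power_watts for seconds."""
    return power_watts * seconds / 3600.0


per_query_wh = energy_wh(TV_POWER_WATTS, TV_SECONDS)
# Per-query energy two years earlier, as implied by the 33x ratio.
earlier_per_query_wh = per_query_wh * IMPROVEMENT_FACTOR

print(f"Per query now: {per_query_wh:.2f} Wh")
print(f"Implied per query two years ago: {earlier_per_query_wh:.2f} Wh")
```

Under that assumption a query costs about a quarter of a watt-hour today. A different TV wattage scales both numbers proportionally.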

Technological Leap: Under the Hood of AI Efficiency

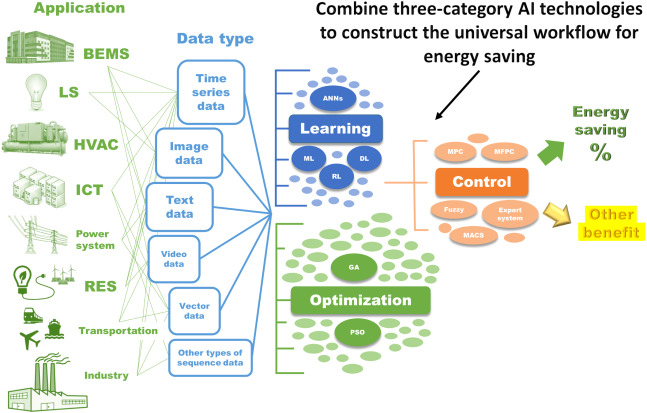

Recent innovations include the integration of "textual gradients" for optimization and novel architecture designs such as improved sparse attention mechanisms. These advances allow AI to deliver high performance while sharply cutting computational and energy costs. Lower costs in turn enable wider deployment in resource-constrained environments, from mobile devices to large-scale data centers, making AI more accessible and sustainable for global businesses and researchers alike[3].
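To give a sense of why sparse attention saves compute, the sketch below counts how many query-key pairs are scored under dense attention versus a sliding-window pattern. The research summary does not say which sparse variant is used, so the sliding window here is an assumption chosen for illustration, and the function names and the sequence and window sizes are hypothetical.

```python
def dense_pairs(seq_len: int) -> int:
    """Dense attention scores every query token against every key token."""
    return seq_len * seq_len


def windowed_pairs(seq_len: int, window: int) -> int:
    """Sliding-window attention: token i attends only to tokens j with |i - j| <= window."""
    return sum(
        min(seq_len - 1, i + window) - max(0, i - window) + 1
        for i in range(seq_len)
    )


# Illustrative sizes only (not taken from the research).
seq_len, window = 4096, 128
dense = dense_pairs(seq_len)
sparse = windowed_pairs(seq_len, window)
print(f"dense pairs:  {dense}")
print(f"sparse pairs: {sparse}")
print(f"reduction:    {dense / sparse:.1f}x fewer attention scores")
```

The dense count grows with the square of the sequence length, while the windowed count grows roughly linearly, so the gap widens as inputs get longer.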

Industrial and Ecological Impact

Major tech companies are already moving to integrate these eco-friendly models into production. Google announced that its enterprise AI services, now built on these next-gen models, have cut cloud compute bills and carbon footprints substantially. Companies adopting the new architectures report not just lower operational costs but also alignment with evolving regulatory requirements on environmental sustainability[4][6].

If this efficiency trend continues, AI's total energy demand, which is projected to rise sharply with mass adoption, could level off or even fall in absolute terms despite industry growth, provided that energy per query keeps falling faster than query volume rises. This addresses growing public and governmental concerns over AI's environmental load, a debate that has intensified as AI becomes a foundational technology for enterprises worldwide[3][4].
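The reasoning here comes down to a simple product: total energy equals query volume times energy per query. The sketch below projects that product under two hypothetical growth scenarios. The query growth rates and starting values are invented for illustration, and the yearly efficiency gain assumes the 33-fold improvement was spread evenly over the two years.

```python
import math


def project_total_energy(
    queries_0: float,
    energy_per_query_0: float,
    query_growth: float,
    efficiency_gain: float,
    years: int,
) -> list[float]:
    """Total energy for years 0..years.

    query_growth: yearly multiplier on query volume (e.g. 3.0 means 3x per year).
    efficiency_gain: yearly divisor on energy per query (e.g. 5.0 means 5x less).
    """
    totals = []
    for year in range(years + 1):
        queries = queries_0 * query_growth**year
        energy_per_query = energy_per_query_0 / efficiency_gain**year
        totals.append(queries * energy_per_query)
    return totals


# ASSUMPTION: 33x over two years, spread evenly, is about 5.74x per year.
yearly_gain = math.sqrt(33.0)

# Hypothetical scenarios, normalized so that year 0 total energy is 1.0.
slower_adoption = project_total_energy(1.0, 1.0, query_growth=3.0, efficiency_gain=yearly_gain, years=3)
faster_adoption = project_total_energy(1.0, 1.0, query_growth=8.0, efficiency_gain=yearly_gain, years=3)

print("3x yearly query growth:", [round(t, 3) for t in slower_adoption])
print("8x yearly query growth:", [round(t, 3) for t in faster_adoption])
```

Total energy falls only in the first scenario, where the yearly efficiency gain exceeds yearly query growth. In the second, demand still rises despite the same efficiency trend.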

What's Next? Expert Perspectives

Industry leaders and AI researchers anticipate that this leap will catalyze further investment in green AI technologies and may even become a baseline for regulatory standards. Experts at the forefront, such as Dr. Jane Chen (DeepMind), predict that "the age of wasteful, power-hungry AI is closing." Researchers are already working to extend these improvements to multimodal models (text, vision, audio) and edge devices, suggesting that the efficiency race in AI is just beginning. With Asia and Europe spearheading public-private partnerships on sustainable AI, global momentum is building fast[3][6].

How Communities View AI's Energy Efficiency Breakthrough

A surge of discussion followed the research announcement, with debate centering on real-world impact, credibility, and ethics.

- Optimists & Industry Leaders (≈45%): Enthusiastic users praise this as a turning point for responsible AI, with trending tweets by @TheGreenNet and @a16zAI lauding the 33x energy cut. Leaders highlight that this could transform AI's environmental reputation and reduce enterprise costs long-term.

- Skeptics & Critical Voices (≈30%): Critics on r/MachineLearning and @aiSustain question the scalability of lab results to commercial production, with some noting that cloud provider claims often fail to reflect the full lifecycle footprint.

- AI Ethics & Policy Advocates (≈15%): Thoughtful posts from @aiPolicyWatch and r/Futurology urge regulators to set global standards based on these new baselines, warning that rapid AI expansion still risks outpacing efficiency gains if not actively managed.

- Techies & DIY Builders (≈10%): Power users like @mlhackers see opportunity for deploying more AI at the edge, sharing guides to upgrade local hardware for green computation.

Overall sentiment is positive: the majority view this as real progress, though skepticism persists on how quickly benefits will reach users at scale. Notably, Google DeepMind researchers and venture leaders have joined the debate, pushing for further transparency in energy use reporting.